If you install Node-RED on your Android phone using RedMobile (not available for iPhone), you can easily access the phone’s resources, including all the sensors, GPS, camera, text-to-speech, speech-to-text, and AI models. The image AI on Android is not limited to images from the phone’s own camera. By pushing images from, for example, an Axis camera, you can obtain classification results from the phone, each consisting of a label and a confidence level. Some labels I receive from my home cameras are Vehicle, Car, Building, Sky, Plant, Road, Garden, Stairs, Asphalt, Ice Bumper, and Soil. I do not have the complete list of labels that can be returned, and it may vary between phone models.
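Since each result is a label with a confidence level, a client will usually want to discard the weak guesses. Here is a minimal Python sketch of that filtering. It assumes the phone's flow replies with a JSON array of objects with `label` and `confidence` fields. That shape is my assumption for illustration, not something RedMobile documents, and the sample values are made up rather than real output.

```python
import json

def top_labels(response_body: str, min_confidence: float = 0.6) -> list[tuple[str, float]]:
    """Return (label, confidence) pairs at or above the threshold, best first.

    Assumes a JSON array like [{"label": "...", "confidence": 0.0-1.0}, ...];
    adjust the field names to match what your phone flow actually returns.
    """
    results = json.loads(response_body)
    kept = [
        (item["label"], float(item["confidence"]))
        for item in results
        if float(item["confidence"]) >= min_confidence
    ]
    # Sort by confidence, highest first
    return sorted(kept, key=lambda pair: pair[1], reverse=True)

# Hypothetical sample response, for illustration only
sample = (
    '[{"label": "Car", "confidence": 0.91},'
    ' {"label": "Sky", "confidence": 0.42},'
    ' {"label": "Road", "confidence": 0.77}]'
)
print(top_labels(sample))  # [('Car', 0.91), ('Road', 0.77)]
```

The 0.6 threshold is arbitrary. Tune it against what your own cameras produce.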

To Replicate:

- Install and start RedMobile on your Android device.

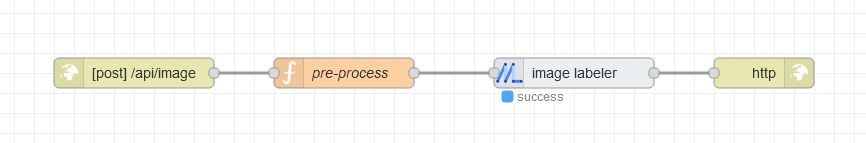

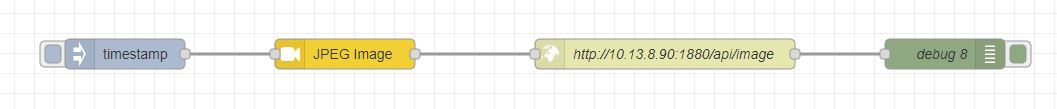

- Create a flow using an HTTP endpoint that clients can use to push images and obtain classification labels. I recommend using your PC web browser to access the phone’s Node-RED at http://phone-ip:1880/red.

- On a different Node-RED (hosted on a RasPi, computer, or camera), create a flow that captures an image and sends it to the phone for analysis.
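The sending side does not have to be Node-RED. Any client that can make an HTTP POST will do. As a rough sketch, here is a plain Python client standing in for the second flow. The `/classify` path and the `image/jpeg` body format are assumptions; use whatever URL and payload your HTTP-in node on the phone actually expects.

```python
import urllib.request

# Hypothetical endpoint: replace phone-ip and the path with the ones
# defined by the HTTP-in node in your phone flow.
PHONE_URL = "http://phone-ip:1880/classify"

def build_request(image_bytes: bytes, url: str = PHONE_URL) -> urllib.request.Request:
    """Build a POST request carrying the raw JPEG bytes as the body."""
    return urllib.request.Request(
        url,
        data=image_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )

def classify(image_bytes: bytes, url: str = PHONE_URL, timeout: float = 10.0) -> str:
    """Send the image to the phone and return the response body as text."""
    with urllib.request.urlopen(build_request(image_bytes, url), timeout=timeout) as resp:
        return resp.read().decode("utf-8")

# Usage, assuming a snapshot saved from the camera as snapshot.jpg:
#   with open("snapshot.jpg", "rb") as f:
#       print(classify(f.read()))
```

Keeping the request construction separate from the network call makes it easy to check what will be sent before pointing it at the phone.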

Is this practical and useful in a production system? I don’t know yet. But it is definitely a cool thing to play with.

One valuable use case I have solved is a Node-RED Dashboard on the phone that controls and monitors my system. I use speech-to-text to send commands to my system and text-to-speech for voice notifications. It works even when the phone is idle in my pocket, so I can still hear the notifications. Speech-to-text is fast and reliable, with no need to sign up for a costly or complicated web service. With Tailscale on my phone, I have a constant, secure connection to my system even when I am away on 4G. Had I tried to build my own Android application, it would have taken me weeks to delve into Android SDK development. With Node-RED, I solved the same problem in a few hours.